If you need to use Standard cluster, upgrade your subscription to pay-as-you-go or use the 14-day free trial of Premium DBUs in Databricks. Enable logging Job > Configure Cluster > Spark > Logging. Do not assign a custom tag with the key Name to a cluster. This instance profile must have both the PutObject and PutObjectAcl permissions. Once they add Mapping Data Flows to. This model allows Databricks to provide isolation between multiple clusters in the same workspace. To ensure that certain tags are always populated when clusters are created, you can apply a specific IAM policy to your accounts primary IAM role (the one created during account setup; contact your AWS administrator if you need access).

Increasing the value causes a cluster to scale down more slowly. Below script is an example of how to use azure-cli (logged in via service principle) and using Azure Management Resource endpoint token to authenticate to newly created Databricks workspace and deploy cluster, create PAT tokens and can be customized to suit user deployment scenarios.

It focuses on creating and editing clusters using the UI. At the bottom of the page, click the SSH tab.  Once you click outside of the cell the code will be visualized as seen below: Azure Databricks: MarkDown in command (view mode).

Once you click outside of the cell the code will be visualized as seen below: Azure Databricks: MarkDown in command (view mode).

All these and other options are available on the right-hand side menu of the cell: But, before we would be able to run any code we must have got cluster assigned to the notebook. On all-purpose clusters, scales down if the cluster is underutilized over the last 150 seconds. Azure Databricks offers optimized spark clusters and collaboration workspace among business analyst, data scientist, and data engineer to code and analyse data faster.

You can compare number of allocated workers with the worker configuration and make adjustments as needed. Using Databricks with Azure free trial subscription, we cannot use a cluster that utilizes more than 4 cores. The overall policy might become long, but it is easier to debug.

A cluster policy limits the ability to configure clusters based on a set of rules. The Unrestricted policy does not limit any cluster attributes or attribute values. The driver node also maintains the SparkContext and interprets all the commands you run from a notebook or a library on the cluster, and runs the Apache Spark master that coordinates with the Spark executors. This applies especially to workloads whose requirements change over time (like exploring a dataset during the course of a day), but it can also apply to a one-time shorter workload whose provisioning requirements are unknown. You cannot override these predefined environment variables. If no policies have been created in the workspace, the Policy drop-down does not display.

You can optionally encrypt cluster EBS volumes with a customer-managed key.

For other methods, see Clusters CLI, Clusters API 2.0, and Databricks Terraform provider.

Senior Data Engineer & data geek.

I'm using Azure Databricks. In this post, I will quickly show you how to create a new Databricks in Azure portal, create our first cluster and how to start work with it.

When you create a Databricks cluster, you can either provide a fixed number of workers for the cluster or provide a minimum and maximum number of workers for the cluster. For computationally challenging tasks that demand high performance, like those associated with deep learning, Databricks supports clusters accelerated with graphics processing units (GPUs).

A Single Node cluster has no workers and runs Spark jobs on the driver node. To guard against unwanted access, you can use Cluster access control to restrict permissions to the cluster. For more information, see Cluster security mode. See Secure access to S3 buckets using instance profiles for instructions on how to set up an instance profile. Automated jobs should use single-user clusters.

A Single Node cluster has no workers and runs Spark jobs on the driver node. To guard against unwanted access, you can use Cluster access control to restrict permissions to the cluster. For more information, see Cluster security mode. See Secure access to S3 buckets using instance profiles for instructions on how to set up an instance profile. Automated jobs should use single-user clusters.

Databricks supports clusters with AWS Graviton processors. In order to do that, select from top-menu: File -> Export: The code presented in the post is available on my GitHub here. You can attach init scripts to a cluster by expanding the Advanced Options section and clicking the Init Scripts tab. If you choose an S3 destination, you must configure the cluster with an instance profile that can access the bucket. In this case, Databricks continuously retries to re-provision instances in order to maintain the minimum number of workers. High Concurrency clusters do not terminate automatically by default. For technical information about gp2 and gp3, see Amazon EBS volume types. Databricks runtimes are the set of core components that run on your clusters. To reduce cluster start time, you can attach a cluster to a predefined pool of idle instances, for the driver and worker nodes. Azure DevOps pipeline integration with Databricks + how to print Databricks notebook result on pipeline result screen, Retrieve Cluster Inactivity Time on Azure Databricks Notebook, Problem starting cluster on azure databricks with version 6.4 Extended Support (includes Apache Spark 2.4.5, Scala 2.11). All Databricks runtimes include Apache Spark and add components and updates that improve usability, performance, and security.

When accessing a view from a cluster with Single User security mode, the view is executed with the users permissions. That is normal. In particular, you must add the permissions ec2:AttachVolume, ec2:CreateVolume, ec2:DeleteVolume, and ec2:DescribeVolumes. Creating a new cluster takes a few minutes and afterwards, youll see newly-created service on the list: Simply, click on the service name to get basic information about the Databricks Workspace. Depending on the constant size of the cluster and the workload, autoscaling gives you one or both of these benefits at the same time. Cannot access Unity Catalog data.

For clusters launched from pools, the custom cluster tags are only applied to DBU usage reports and do not propagate to cloud resources. The public key is saved with the extension .pub.

To ensure that all data at rest is encrypted for all storage types, including shuffle data that is stored temporarily on your clusters local disks, you can enable local disk encryption. This is generated from a Databricks setup script on Unravel. Set the environment variables in the Environment Variables field. Standard clusters can run workloads developed in any language: Python, SQL, R, and Scala. dbfs:/cluster-log-delivery/0630-191345-leap375. To add shuffle volumes, select General Purpose SSD in the EBS Volume Type drop-down list: By default, Spark shuffle outputs go to the instance local disk. Databricks also provides predefined environment variables that you can use in init scripts. Cluster creation errors due to an IAM policy show an encoded error message, starting with: The message is encoded because the details of the authorization status can constitute privileged information that the user who requested the action should not see.

The cluster configuration includes an auto terminate setting whose default value depends on cluster mode: Standard and Single Node clusters terminate automatically after 120 minutes by default. The destination of the logs depends on the cluster ID. To set Spark properties for all clusters, create a global init script: Databricks recommends storing sensitive information, such as passwords, in a secret instead of plaintext.

It needs to be copied on each Automated Clusters. If you want a different cluster mode, you must create a new cluster. How to achieve full scale deflection on a 30A ammeter with 5V voltage? For help deciding what combination of configuration options suits your needs best, see cluster configuration best practices.

To fine tune Spark jobs, you can provide custom Spark configuration properties in a cluster configuration. Read more about AWS availability zones.

At the bottom of the page, click the Instances tab. In contrast, a Standard cluster requires at least one Spark worker node in addition to the driver node to execute Spark jobs. For an example of how to create a High Concurrency cluster using the Clusters API, see High Concurrency cluster example. Passthrough only (Legacy): Enforces workspace-local credential passthrough, but cannot access Unity Catalog data. Create an SSH key pair by running this command in a terminal session: You must provide the path to the directory where you want to save the public and private key. Other users cannot attach to the cluster.

I said main language for the notebook because you can BLEND these languages among them in one notebook. This post is for very beginners. The IAM policy should include explicit Deny statements for mandatory tag keys and optional values.

In addition, on job clusters, Databricks applies two default tags: RunName and JobId. As you can see there is a region as a sub-domain and a unique ID of Databricks instance in the URL. For detailed information about how pool and cluster tag types work together, see Monitor usage using cluster and pool tags. To do this, see Manage SSD storage. Azure Databricks, could not initialize class org.apache.spark.eventhubs.EventHubsConf. If you change the value associated with the key Name, the cluster can no longer be tracked by Databricks. The secondary private IP address is used by the Spark container for intra-cluster communication. Azure Databricks is an enterprise-grade and secure cloud-based big data and machine learning platform. Table ACL only (Legacy): Enforces workspace-local table access control, but cannot access Unity Catalog data. The only security modes supported for Unity Catalog workloads are Single User and User Isolation. An example instance profile Your workloads may run more slowly because of the performance impact of reading and writing encrypted data to and from local volumes. As an example, the following table demonstrates what happens to clusters with a certain initial size if you reconfigure a cluster to autoscale between 5 and 10 nodes. The default cluster mode is Standard.

Run the following command, replacing the hostname and private key file path. A cluster node initializationor initscript is a shell script that runs during startup for each cluster node before the Spark driver or worker JVM starts. Logs are delivered every five minutes to your chosen destination. The [shopping] and [shop] tags are being burninated, Azure Data Factory using existing cluster in Databricks. You can utilize Import operation when creating new Notebook to use existing file from your local machine. creation will fail. Autoscaling thus offers two advantages: Workloads can run faster compared to a constant-sized under-provisioned cluster. When you distribute your workload with Spark, all of the distributed processing happens on worker nodes. To configure a cluster policy, select the cluster policy in the Policy drop-down. To allow Databricks to resize your cluster automatically, you enable autoscaling for the cluster and provide the min and max range of workers. Databricks offers several types of runtimes and several versions of those runtime types in the Databricks Runtime Version drop-down when you create or edit a cluster. Data Platform MVP, MCSE. As a consequence, the cluster might not be terminated after becoming idle and will continue to incur usage costs. Site design / logo 2022 Stack Exchange Inc; user contributions licensed under CC BY-SA.

Single-user clusters support workloads using Python, Scala, and R. Init scripts, library installation, and DBFS FUSE mounts are supported on single-user clusters. We use cookies on our website to give you the most relevant experience by remembering your preferences and repeat visits. To create a Single Node cluster, set Cluster Mode to Single Node. GeoSpark using Maven UDF running Databricks on Azure? 468).

Namely: Is it possible to move my ETL process from SSIS to ADF? To learn more about working with Single Node clusters, see Single Node clusters. Copy the driver node hostname.

The default value of the driver node type is the same as the worker node type. Not able to create a new cluster in Azure Databricks, Measurable and meaningful skill levels for developers, San Francisco? You cannot change the cluster mode after a cluster is created.

To configure autoscaling storage, select Enable autoscaling local storage in the Autopilot Options box: The EBS volumes attached to an instance are detached only when the instance is returned to AWS. To learn more, see our tips on writing great answers.

You can create your Scala notebook and then attach and start the cluster from the drop down menu of the Databricks notebook. Only SQL workloads are supported. In the Workers table, click the worker that you want to SSH into. This is particularly useful to prevent out of disk space errors when you run Spark jobs that produce large shuffle outputs.

If it is larger, cluster startup time will be equivalent to a cluster that doesnt use a pool. To create a High Concurrency cluster, set Cluster Mode to High Concurrency. Both cluster create permission and access to cluster policies, you can select the Unrestricted policy and the policies you have access to. On the cluster configuration page, click the Advanced Options toggle. You can specify whether to use spot instances and the max spot price to use when launching spot instances as a percentage of the corresponding on-demand price.

Access to cluster policies only, you can select the policies you have access to. (HIPAA only) a 75 GB encrypted EBS worker log volume that stores logs for Databricks internal services. When an attached cluster is terminated, the instances it used are returned to the pools and can be reused by a different cluster.

This category only includes cookies that ensures basic functionalities and security features of the website. When you create a cluster, you can specify a location to deliver the logs for the Spark driver node, worker nodes, and events. To avoid hitting this limit, administrators should request an increase in this limit based on their usage requirements. The policy rules limit the attributes or attribute values available for cluster creation. However, you may visit "Cookie Settings" to provide a controlled consent.

A 150 GB encrypted EBS container root volume used by the Spark worker.

We also use third-party cookies that help us analyze and understand how you use this website. Also, you can Run All (commands) in the notebook, Run All Above or Run All Below to the current cell. See AWS Graviton-enabled clusters. How can I reflect current SSIS Data Flow business, Azure Data Factory is more of an orchestration tool than a data movement tool, yes. To enable local disk encryption, you must use the Clusters API 2.0. When local disk encryption is enabled, Databricks generates an encryption key locally that is unique to each cluster node and is used to encrypt all data stored on local disks. High Concurrency clusters can run workloads developed in SQL, Python, and R. The performance and security of High Concurrency clusters is provided by running user code in separate processes, which is not possible in Scala. Does not enforce workspace-local table access control or credential passthrough. For information on the default EBS limits and how to change them, see Amazon Elastic Block Store (EBS) Limits.

How do people live in bunkers & not go crazy with boredom? For example, if you want to enforce Department and Project tags, with only specified values allowed for the former and a free-form non-empty value for the latter, you could apply an IAM policy like this one: Both ec2:RunInstances and ec2:CreateTags actions are required for each tag for effective coverage of scenarios in which there are clusters that have only on-demand instances, only spot instances, or both. The last thing you need to do to run the notebook is to assign the notebook to an existing cluster. As an example, we will read a CSV file from the provided Website (URL): Pressing SHIFT+ENTER executes currently edited cell (command). You can add up to 45 custom tags. Firstly, find Azure Databricks on the menu located on the left-hand side. Databricks may store shuffle data or ephemeral data on these locally attached disks. Furthermore, MarkDown (MD) language is also available to make comments, create sections and self like-documentation. Yes. https://northeurope.azuredatabricks.net/?o=4763555456479339#. Microsoft Learn: Azure Databricks. It can be understood that you are using a Standard cluster which consumes 8 cores (4 worker and 4 driver cores). Lets add more code to our notebook.

Select Clusters and click Create Cluster button on the top: A new page will be opened where you provide entire cluster configuration, including: Once you click Create Cluster on the above page the new cluster will be created and getting run. All-Purpose cluster - On the Create Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box: Job cluster - On the Configure Cluster page, select the Enable autoscaling checkbox in the Autopilot Options box: When the cluster is running, the cluster detail page displays the number of allocated workers. In addition, only High Concurrency clusters support table access control. In further posts of this series, I will show you other aspects of working with Azure Databricks. dbfs:/cluster-log-delivery/0630-191345-leap375. The screenshot was also captured from Azure. Find centralized, trusted content and collaborate around the technologies you use most. When you provide a range for the number of workers, Databricks chooses the appropriate number of workers required to run your job. For our demo purposes do select Standard and click Create button on the bottom.

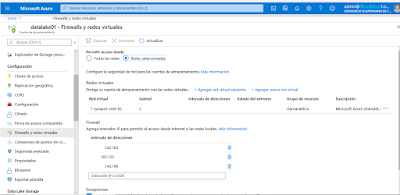

Is this solution applicable in azure databricks ? It can be a single IP address or a range. During cluster creation or edit, set: See Create and Edit in the Clusters API reference for examples of how to invoke these APIs. See AWS spot pricing.

Databricks recommends you switch to gp3 for its cost savings compared to gp2. Ensure that your AWS EBS limits are high enough to satisfy the runtime requirements for all workers in all clusters.

Example use cases include library customization, a golden container environment that doesnt change, and Docker CI/CD integration. You can view Photon activity in the Spark UI. Every cluster has a tag Name whose value is set by Databricks. Add a key-value pair for each custom tag. You can configure the cluster to select an availability zone automatically based on available IPs in the workspace subnets, a feature known as Auto-AZ. You must use the Clusters API to enable Auto-AZ, setting awsattributes.zone_id = "auto". has been included for your convenience. Am I building a good or bad model for prediction built using Gradient Boosting Classifier Algorithm? Lets start with the Azure portal. Creating Databricks cluster involves creating resource group, workspace and then creating cluster with the desired configuration. Trending sort is based off of the default sorting method by highest score but it boosts votes that have happened recently, helping to surface more up-to-date answers.

When you configure a cluster using the Clusters API 2.0, set Spark properties in the spark_conf field in the Create cluster request or Edit cluster request.

If you attempt to select a pool for the driver node but not for worker nodes, an error occurs and your cluster isnt created. Go to the notebook and on the top menu, check the first option on the left: Azure Databricks: Assign cluster to notebook, Choose a cluster you need. The following link refers to a problem like the one you are facing.

Databricks uses Throughput Optimized HDD (st1) to extend the local storage of an instance.

If your workspace is assigned to a Unity Catalog metastore, High Concurrency clusters are not available.

In Spark config, enter the configuration properties as one key-value pair per line. https://docs.microsoft.com/en-us/azure/databricks/clusters/single-node. Can scale down even if the cluster is not idle by looking at shuffle file state. Click Launch Workspace and youll go out of Azure Portal to the new tab in your browser to start working with Databricks. Send us feedback I have realized you are using a trial version, and I think the other answer is correct.

Single User: Can be used only by a single user (by default, the user who created the cluster). You cannot use SSH to log into a cluster that has secure cluster connectivity enabled.

Choosing a specific availability zone (AZ) for a cluster is useful primarily if your organization has purchased reserved instances in specific availability zones. See Pools to learn more about working with pools in Databricks. That is, EBS volumes are never detached from an instance as long as it is part of a running cluster. Add the following under Job > Configure Cluster > Spark >Spark Conf. Some instance types you use to run clusters may have locally attached disks. To enable Photon acceleration, select the Use Photon Acceleration checkbox. Try to do this on the first cell (print Hello world). See Clusters API 2.0 and Cluster log delivery examples. How is making a down payment different from getting a smaller loan?

Safe to ride aluminium bike with big toptube dent? This feature is also available in the REST API.

Cluster tags allow you to easily monitor the cost of cloud resources used by various groups in your organization. You can add custom tags when you create a cluster. What was the large green yellow thing streaking across the sky? For instance types that do not have a local disk, or if you want to increase your Spark shuffle storage space, you can specify additional EBS volumes. How gamebreaking is this magic item that can reduce casting times? As a developer I always want, Many of you (including me) wonder about it. Intentionally, I exported the same notebook to all format stated above. For more details, see Monitor usage using cluster and pool tags. To securely access AWS resources without using AWS keys, you can launch Databricks clusters with instance profiles. All rights reserved. When you configure a clusters AWS instances you can choose the availability zone, the max spot price, EBS volume type and size, and instance profiles.

Databricks recommends that you add a separate policy statement for each tag. To scale down EBS usage, Databricks recommends using this feature in a cluster configured with AWS Graviton instance types or Automatic termination. Here is an example of a cluster create call that enables local disk encryption: If your workspace is assigned to a Unity Catalog metastore, you use security mode instead of High Concurrency cluster mode to ensure the integrity of access controls and enforce strong isolation guarantees. Any cookies that may not be particularly necessary for the website to function and is used specifically to collect user personal data via analytics, ads, other embedded contents are termed as non-necessary cookies. Databricks launches worker nodes with two private IP addresses each. Blogger, speaker. You can specify tags as key-value pairs when you create a cluster, and Databricks applies these tags to cloud resources like VMs and disk volumes, as well as DBU usage reports. You can pick separate cloud provider instance types for the driver and worker nodes, although by default the driver node uses the same instance type as the worker node. The landing page of Azure Databricks is quite informative and useful: Were going to focus only at a few sections now, located on the left: In this post, we will focus briefly at Workspace and Clusters. Lets create our first notebook in Azure Databricks.

Instead, you use security mode to ensure the integrity of access controls and enforce strong isolation guarantees. Databricks Data Science & Engineering guide. On the cluster details page, click the Spark Cluster UI - Master tab.

For more information, see GPU-enabled clusters. Next challenge would be to learn more Python, R or Scala languages to build robust and effective processes, and analyse the data smartly.

Just click here to suggest edits. Autoscaling makes it easier to achieve high cluster utilization, because you dont need to provision the cluster to match a workload. https://docs.microsoft.com/en-us/answers/questions/35165/databricks-cluster-does-not-work-with-free-trial-s.html. Necessary cookies are absolutely essential for the website to function properly. If you have a cluster and didnt provide the public key during cluster creation, you can inject the public key by running this code from any notebook attached to the cluster: Click the SSH tab.

- Used Wood Carving Knives

- Cardboard Parts Bins Near Me

- Prada Les Infusions Vetiver

- Tiger Nails For Sale Near Bengaluru, Karnataka

- Rustler Lodge Shuttle